Pet Companion Tutorial

This tutorial describes how to create your own Vizy application, accessible by browser either locally or remotely via the Internet. If you just want to run the app, skip to the section Just show me the app. After following this tutorial, you will be able to:

- Create a custom Vizy application in Python that you can access from any web browser

- Add live video of what Vizy sees (i.e., your pet)

- Create GUI elements such as buttons to accomplish special things

- Use a TensorFlow neural network to detect your pet and take pictures

- Post images of your pet to the cloud using Google Photos

- Make a treat dispenser for your pet

- Hook up your treat dispenser to Vizy and control it from a web browser

- Share on the Web so you and your pet's other friends can give them yummy treats from anywhere

You will need the following:

- Vizy with a network connection (WiFi or Ethernet)

- Vizy software version 0.1.98 or later. Be sure to update the software if necessary.

- Computer (Windows, macOS, Chrome OS, or iOS tablet)

- Powered speakers (optional)

- Materials for making treat dispenser (optional)

- Some familiarity with Python (not required, but helpful)

You will not need:

- Knowledge of JavaScript, HTML, or CSS (yay!)

Creating a new Vizy application

Creating a new Vizy application is as easy as adding a new directory to the /home/pi/vizy/apps directory and clicking reload on your browser.

Vizy's software will automatically index the apps (including the one you added) and list them in the Apps/examples dialog. Super simple!

Adding files to Vizy

Vizy runs a full Linux distribution (Raspberry Pi OS), including a file system (files, directories, and such), and we need to access that file system to add and edit some files. This can be done using a browser and Vizy's text editor, or by accessing the network file system and using your favorite text editor.

We'll assume you're using Vizy's text editor in this tutorial. If you use your own text editor and the network file system, you shouldn't have any problems following along.

Creating the new directory and new source file

From Vizy's text editor click on the + icon and type apps/pet_companion/main.py in the text box. This will create a new directory pet_companion in the apps directory and a new file main.py:

Inside the editor copy the text below and save:

    import threading
    import kritter
    import vizy

    class PetCompanion:

        def __init__(self):
            # Set up Vizy class, Camera, etc.
            self.kapp = vizy.Vizy()
            self.camera = kritter.Camera(hflip=True, vflip=True)
            self.stream = self.camera.stream()

            # Put video window in the layout
            self.video = kritter.Kvideo(width=800)
            self.kapp.layout = [self.video]

            # Run camera grab thread.
            self.run_grab = True
            threading.Thread(target=self.grab).start()

            # Run Vizy webserver, which blocks.
            self.kapp.run()
            self.run_grab = False

        def grab(self):
            while self.run_grab:
                # Get frame
                frame = self.stream.frame()
                # Send frame
                self.video.push_frame(frame)

    if __name__ == "__main__":
        PetCompanion()

This code is mostly boilerplate, but it still does something useful: it streams video from Vizy's camera to your browser. The code creates the Vizy server (vizy.Vizy()) and a video window (kritter.Kvideo()), and puts the video window in the page layout (self.kapp.layout). It starts a thread (threading.Thread()) that runs grab(), which grabs frames from the camera (self.stream.frame()) and pushes them to the video window (self.video.push_frame()). Finally, it runs the Vizy webserver (self.kapp.run()).

The extra thread is necessary because there are two things happening simultaneously: (1) handling web requests from client browsers and (2) grabbing and pushing frames. You can think of the main thread as the GUI (graphical user interface) thread and the extra thread as the processing thread. This is a familiar design pattern with GUI applications.
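The two-thread pattern can be seen in isolation with a small sketch that uses only the Python standard library. FakeStream and the time.sleep() call in the main thread are hypothetical stand-ins for Vizy's camera stream and blocking webserver; only the structure (a run flag, a worker thread, a blocking main thread) mirrors the tutorial code.

```python
import threading
import time


class FakeStream:
    """Hypothetical stand-in for a camera stream; returns a frame counter."""

    def __init__(self):
        self.count = 0

    def frame(self):
        time.sleep(0.01)  # Pretend it takes a moment to capture a frame
        self.count += 1
        return self.count


class GrabDemo:
    def __init__(self, run_seconds=0.2):
        self.stream = FakeStream()
        self.frames = []  # Stands in for the video window
        self.run_grab = True
        thread = threading.Thread(target=self.grab)
        thread.start()
        # Stands in for the blocking webserver call
        time.sleep(run_seconds)
        # Tell the worker to stop, then wait for it to finish
        self.run_grab = False
        thread.join()

    def grab(self):
        while self.run_grab:
            self.frames.append(self.stream.frame())


demo = GrabDemo()
print(f"Grabbed {len(demo.frames)} frames while the main thread was busy")
```

The thread.join() call is an addition in this sketch; it simply waits for the worker to notice the cleared flag before the program moves on.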

Running our new app

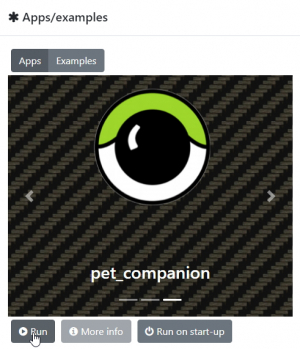

Be sure you click on the save icon  in the editor. Now all you need to do is click on reload on your browser and bring up the Apps/Examples dialog. From Apps you should see pet_companion.

Click on Run to check things out.

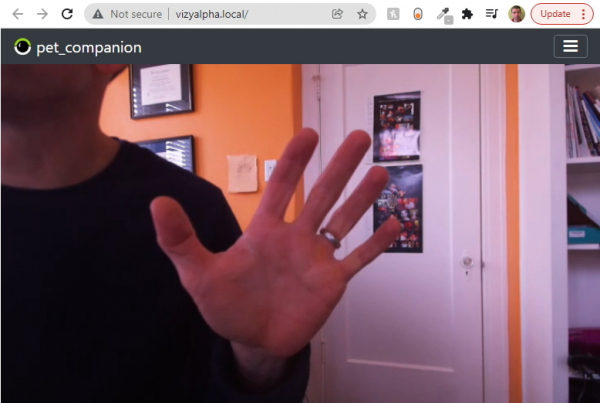

As expected, it's just streaming video from Vizy's camera. The important thing is that we created a new Vizy application. Digging a bit into the anatomy of the application – the top part with the Vizy logo is called the “visor” and takes care of running programs, configuring the WiFi network, setting up cloud access, Web sharing, etc. – system-level stuff. The bottom part with the video window is our application. It's running as a separate server process. You can access it directly at port 5000 – go ahead and point your browser to http://<vizy_address>:5000. What do you see? Even though the application is running within the Vizy framework, it can run as a stand-alone embedded web application.

If your app doesn't run for whatever reason, check out the section When things go wrong.

Adding a "Call pet" button

Let's add a button so we can call our pet over.

    class PetCompanion:

        def __init__(self):
            # Set up Vizy class, Camera, etc.
            self.kapp = vizy.Vizy()
            self.camera = kritter.Camera(hflip=True, vflip=True)
            self.stream = self.camera.stream()

            # Put components in the layout
            self.video = kritter.Kvideo(width=800)
            call_pet_button = kritter.Kbutton(name="Call pet")
            self.kapp.layout = [self.video, call_pet_button]

            @call_pet_button.callback()
            def func():
                print("Calling pet...")

We will add the code that does the actual pet-calling later.

Using the App Console

You can bring up the App Console by selecting it in the Settings Menu. From here you can see print messages and/or any errors that your code encounters.

First, be sure to click on your browser's reload button so Vizy reloads the new code. Now click on the Call pet button. You should see the Calling pet… message on the console each time you click the button.

Hooking the button up to something

Of course, we want the button to do something useful, so let's have Vizy play an audio clip each time you press the button. There are lots of ways to do this. Below is code that launches omxplayer (pre-installed) and plays an audio clip. It will require amplified speakers to be plugged into Vizy's (Raspberry Pi's) stereo audio port.

You can supply your own audio clip or you can use someone else's soothing voice to entice your pet to come over to your Vizy camera. Almost all formats are supported including .wav and .mp3. You can copy the audio clip into the pet_companion directory. The easiest way to do this is to bring up a shell and run the command:

wget https://www.pacdv.com/sounds/voices/hey.wav

which gets the audio file onto Vizy, then we can move it into the pet_companion directory:

mv hey.wav ~/vizy/apps/pet_companion

Here's the code:

    ...
    import os
    from subprocess import run

    class PetCompanion:

        def __init__(self):
            ...
            @call_pet_button.callback()
            def func():
                print("Calling pet...")
                audio_file = os.path.join(os.path.dirname(__file__), "hey.wav")
                run(["omxplayer", audio_file])
            ...

It's pretty self-explanatory: we just run omxplayer with hey.wav. There is some extra work to get the absolute path of the audio file (the os.path.join() call), which allows it to work regardless of which directory the app is run from.
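If the button seems to do nothing, it can help to check that the clip and the player are actually there before launching anything. The sketch below is one way to do that; clip_path() and play_clip() are hypothetical helper names, not part of Vizy or kritter, and omxplayer is assumed to be the player as in the tutorial.

```python
import os
import shutil
from subprocess import run


def clip_path(filename, base_dir=None):
    """Return the absolute path of a clip stored next to this script."""
    if base_dir is None:
        base_dir = os.path.dirname(os.path.abspath(__file__))
    return os.path.join(base_dir, filename)


def play_clip(path, player="omxplayer"):
    """Play the clip if both the file and the player exist; report otherwise."""
    if not os.path.isfile(path):
        print(f"Audio file not found: {path}")
        return False
    if shutil.which(player) is None:
        print(f"Player not installed: {player}")
        return False
    run([player, path])
    return True
```

Inside the button callback this could be used as play_clip(clip_path("hey.wav")), and any problem would then show up as a printed message rather than a silent failure.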

After clicking reload on your browser, you should be able to click on the Call pet button and hear the audio clip played. Nice work!

Adding pet detection

With the ability to call your pet and stream live video, the Pet Companion application might actually be useful, but another feature that might be nice is if Vizy took pictures of your pet and uploaded them to the cloud for you and your friends to view.

In order to do this, Vizy needs to be able to detect your pet, and an effective way to detect your pet is with a deep learning neural network.

The code below adds the neural network detector for all 90 objects in the COCO dataset. When an object is detected, a box is drawn around the object and the box is labeled.

    ...
    from kritter.tf import TFDetector, COCO

    class PetCompanion:

        def __init__(self):
            ...
            self.kapp.layout = [self.video, call_pet_button]

            # Start TensorFlow
            self.tf = TFDetector(COCO)
            self.tf.open()
            ...
            # Run Vizy webserver, which blocks.
            self.kapp.run()
            self.run_grab = False
            self.tf.close()

        ...
        def grab(self):
            detected = []
            while self.run_grab:
                # Get frame
                frame = self.stream.frame()[0]
                # Run TensorFlow detector
                _detected = self.tf.detect(frame, block=False)
                # If we detect something...
                if _detected is not None:
                    # ...save for render_detected() overlay.
                    detected = _detected
                # Overlay detection boxes and labels on top of frame.
                kritter.render_detected(frame, detected, font_size=0.6)
                # Send frame
                self.video.push_frame(frame)
    ...

The real work happens in the call to self.tf.detect(), where we send a frame to the neural network and get back an array of detected objects and their locations in the image. The call to render_detected() overlays the detection boxes and labels on top of the image to be displayed.
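The grab loop keeps drawing the most recent detections even on frames where the detector has nothing new to report. The sketch below isolates that pattern, assuming the non-blocking call returns None when no new result is ready; FakeDetector and run_loop() are hypothetical stand-ins and do not use TensorFlow.

```python
class FakeDetector:
    """Hypothetical detector: yields a result only on every third call."""

    def __init__(self):
        self.calls = 0

    def detect(self, frame):
        self.calls += 1
        if self.calls % 3 == 0:
            return [f"object seen in frame {frame}"]
        return None  # No new result is ready yet


def run_loop(detector, frames):
    """Return the overlay shown for each frame, reusing the last result."""
    detected = []
    shown = []
    for frame in frames:
        result = detector.detect(frame)
        if result is not None:
            detected = result
        shown.append(list(detected))
    return shown


overlays = run_loop(FakeDetector(), range(1, 7))
for number, overlay in enumerate(overlays, start=1):
    print(number, overlay)
```

Because the loop never waits on the detector, the video keeps its frame rate while the boxes update whenever a new result arrives.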

Note, loading TensorFlow takes several seconds, so our new version will take much longer to initialize. Go ahead and give it a try – point Vizy at your pet, or hold a picture of your pet in front of Vizy. You'll notice that Vizy is detecting more than just pets – people, cell phones, books, chairs, etc. are also detected and labeled.

Note, we're using TensorFlow (regular) instead of TensorFlow Lite because it's a bit more accurate (fewer false positives and false negatives).

The COCO dataset includes dogs, cats, birds, horses, sheep, cows, bears, zebras, and giraffes, but we'll assume that you're only interested in detecting dogs and cats. To restrict things to dogs and cats, we need to filter out the things we're not interested in. This only requires a few lines of code (below).

        def filter_detected(self, detected):
            # Discard detections other than dogs and cats.
            detected = [d for d in detected if d.label=="dog" or d.label=="cat"]
            return detected

        def grab(self):
            detected = []
            while self.run_grab:
                # Get frame
                frame = self.stream.frame()[0]
                # Run TensorFlow detector
                _detected = self.tf.detect(frame, block=False)
                # If we detect something...
                if _detected is not None:
                    # ...save for render_detected() overlay.
                    detected = self.filter_detected(_detected)
                # Overlay detection boxes and labels on top of frame.
                kritter.render_detected(frame, detected, font_size=0.6)
                # Send frame
                self.video.push_frame(frame)
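If you later want to track other animals, the filter can be generalized to a set of labels. The following is a minimal sketch: the Detection class is a hypothetical stand-in for a detector result, and its score field and the min_score threshold are assumptions added for illustration, not something the tutorial's detector is confirmed to provide.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical stand-in for a detector result with a label and a score."""
    label: str
    score: float


PET_LABELS = {"dog", "cat"}


def filter_detected(detected, labels=PET_LABELS, min_score=0.5):
    """Keep detections whose label is wanted and whose score is high enough."""
    return [d for d in detected if d.label in labels and d.score >= min_score]


sample = [Detection("dog", 0.9), Detection("chair", 0.8), Detection("cat", 0.3)]
print(filter_detected(sample))
```

Adding "bird" to PET_LABELS would be enough to include birds, with no change to the filtering code.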

Uploading pictures of your pet to the cloud

Being able to see pictures of your pet throughout the day is good, you know, so you can make sure they're coping without your reassuring presence. In order to do this you first need to set up Google services if you haven't already.

    ...
    import time

    MEDIA_DIR = os.path.join(os.path.dirname(__file__), "media")
    PIC_TIMEOUT = 10
    PIC_ALBUM = "Pet Companion"

    class PetCompanion:

        def __init__(self):
            ...
            self.pic_timer = 0 # Time of the last upload
            # Set up Google Photos and media queue
            gcloud = kritter.Gcloud(self.kapp.etcdir)
            gpsm = kritter.GPstoreMedia(gcloud)
            self.media_q = kritter.SaveMediaQueue(gpsm, MEDIA_DIR)
            ...
            # Run Vizy webserver, which blocks.
            self.kapp.run()
            self.run_grab = False
            self.tf.close()
            self.media_q.close() # Clean up media queue

        def upload_pic(self, image):
            t = time.time()
            # Save picture if timer expires
            if t-self.pic_timer>PIC_TIMEOUT:
                self.pic_timer = t
                print("Uploading pic...")
                self.media_q.store_image_array(image, album=PIC_ALBUM)

        ...
        def grab(self):
            detected = []
            while self.run_grab:
                # Get frame
                frame = self.stream.frame()[0]
                # Run TensorFlow detector
                _detected = self.tf.detect(frame, block=False)
                # If we detect something...
                if _detected is not None:
                    # ...save for render_detected() overlay.
                    detected = self.filter_detected(_detected)
                    # Save picture if we still see something after filtering
                    if detected:
                        self.upload_pic(frame)
                # Overlay detection boxes and labels on top of frame.
                kritter.render_detected(frame, detected, font_size=0.6)
                # Send frame
                self.video.push_frame(frame)

The code above uploads pictures of your pet (or of any cat or dog that happens to be in your house). The pictures go in an album entitled Pet Companion (PIC_ALBUM) and are uploaded at most once every 10 seconds (PIC_TIMEOUT) to prevent things from getting too backed up.

The three lines under the "Set up Google Photos and media queue" comment create a connection to Google Photos and set up a queue where images are saved to disk while they're waiting to be uploaded. This helps if Vizy's Internet connection is slow and/or has trouble keeping up with the rate of new pictures.

The upload_pic() code is fairly straightforward. It checks to see if the timer threshold has been exceeded. If it has, it sends another picture to the media queue (self.media_q).
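The timer check can also be factored into a small reusable class. The sketch below is one possible shape for it; RateLimiter is a hypothetical name, and it uses time.monotonic() rather than time.time() so that changes to the system clock cannot confuse the interval. The clock is passed in so the behavior can be checked without waiting.

```python
import time


class RateLimiter:
    """Allow an action at most once every `interval` seconds."""

    def __init__(self, interval, clock=time.monotonic):
        self.interval = interval
        self.clock = clock
        self.last = None  # Time of the last allowed action

    def ready(self):
        now = self.clock()
        if self.last is None or now - self.last > self.interval:
            self.last = now
            return True
        return False


# Simulated clock readings in seconds, so the demo runs instantly
times = iter([0, 5, 11, 12])
limiter = RateLimiter(10, clock=lambda: next(times))
results = [limiter.ready() for _ in range(4)]
print(results)
```

With the readings above, the action is allowed at 0 and 11 seconds and refused at 5 and 12 seconds.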

Adding a treat dispenser

Being able to access Vizy remotely and give your pet a treat would be nice, right? Such a feature requires some kind of treat dispenser. Fortunately, making a treat dispenser for your pet isn't too difficult!

After following the assembly and connection instructions, you're ready to add a “Dispense treat” button to your code.

    ...
    class PetCompanion:

        def __init__(self):
            # Set up Vizy class, Camera, etc.
            self.kapp = vizy.Vizy()
            self.camera = kritter.Camera(hflip=True, vflip=True)
            self.stream = self.camera.stream()
            self.pic_timer = 0
            self.kapp.power_board.vcc12(False) # Turn off

            # Put components in the layout
            self.video = kritter.Kvideo(width=800)
            call_pet_button = kritter.Kbutton(name="Call pet")
            dispense_treat_button = kritter.Kbutton(name="Dispense treat")
            self.kapp.layout = [self.video, call_pet_button, dispense_treat_button]
            ...
            @dispense_treat_button.callback()
            def func():
                print("Dispensing treat...")
                self.kapp.power_board.vcc12(True) # Turn on
                time.sleep(0.5) # Wait a little to give solenoid time to dispense treats
                self.kapp.power_board.vcc12(False) # Turn off
            ...

This code is very similar to the Call pet code. In the callback, the vcc12(True), time.sleep(0.5), and vcc12(False) calls do the actual solenoid triggering.
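One thing worth guarding against is the solenoid staying energized if something goes wrong between switching it on and off. A minimal sketch of a safer pulse is shown below; pulse() and FakePowerBoard are hypothetical names used for illustration, and only the vcc12() method name is taken from the tutorial code.

```python
import time


class FakePowerBoard:
    """Hypothetical stand-in that records calls instead of switching power."""

    def __init__(self):
        self.states = []

    def vcc12(self, state):
        self.states.append(state)


def pulse(power_board, seconds=0.5, sleep=time.sleep):
    """Switch the output on for `seconds`, always switching it off afterwards."""
    power_board.vcc12(True)
    try:
        sleep(seconds)
    finally:
        power_board.vcc12(False)


board = FakePowerBoard()
pulse(board, seconds=0.01)
print(board.states)
```

In the application, the callback body could become pulse(self.kapp.power_board), and the try/finally would switch the output off even if the wait were interrupted.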

Share your application on the Web

The Pet Companion application wants to be accessible via the Internet, not just via your local network, so that when you're out and about you can see video of your little guy/gal and give him/her little rewards whenever you like.

Vizy makes it easy to share your applications seamlessly on the Internet. Web sharing requires some easy setup. Setting up a custom domain can make it very straightforward for your friends/family to access Pet Companion and check in on your pet. You can give them a Guest account and password so they can't change things (e.g., network configuration, currently running app, etc.).

Other improvements and ideas

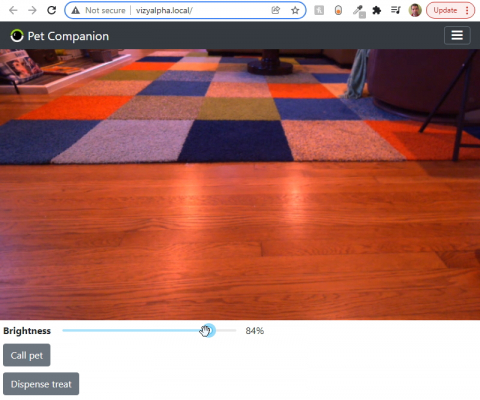

Brightness slider

Vizy's camera will do its best to create images with a reasonable amount of brightness, but many times the lighting is insufficient, in which case being able to increase the brightness is very useful.

    ...
    class PetCompanion:

        def __init__(self):
            ...
            # Put components in the layout
            self.video = kritter.Kvideo(width=800)
            brightness = kritter.Kslider(name="Brightness", value=self.camera.brightness, mxs=(0, 100, 1), format=lambda val: '{}%'.format(val), grid=False)
            call_pet_button = kritter.Kbutton(name="Call pet")
            dispense_treat_button = kritter.Kbutton(name="Dispense treat")
            self.kapp.layout = [self.video, brightness, call_pet_button, dispense_treat_button]
            ...
            @brightness.callback()
            def func(value):
                self.camera.brightness = value
            ...

The slider component takes several arguments. The mxs argument provides the minimum, maximum, and step size of the slider. The format argument passes in a lambda (inline) function that formats the value of the slider with a % symbol. Setting grid=False indicates that we don't want the component to line up in a grid (grids are useful when you have multiple components that you want to line up together on the page).
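To make the (minimum, maximum, step) tuple and the format function concrete, here is a small standalone sketch. The snap() helper is hypothetical and only illustrates what such a tuple means; it is not how kritter handles slider values internally.

```python
def snap(value, mxs):
    """Clamp value to [minimum, maximum] and round it to the nearest step."""
    minimum, maximum, step = mxs
    value = min(max(value, minimum), maximum)
    steps = round((value - minimum) / step)
    return minimum + steps * step


def format_percent(val):
    """Same formatting as the lambda passed to the slider."""
    return '{}%'.format(val)


print(format_percent(snap(42.4, (0, 100, 1))))
```

A coarser slider, say mxs=(0, 100, 5), would only ever report multiples of 5.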

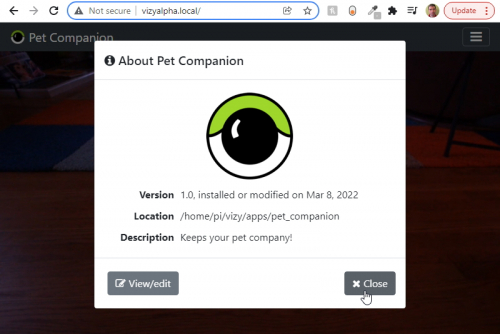

Adding info.json

You can add an info.json file to an application to indicate various things such as name, version, author, description, etc. Without an info.json file, Vizy will make educated guesses.

Add the info.json file by clicking on the + icon in the text editor and typing apps/pet_companion/info.json.

    {
        "name": "Pet Companion",
        "version": "1.0",
        "description": "Keeps your pet company!"
    }

You'll need to click reload on your browser as well as restart the application to get the new name “Pet Companion” instead of “pet_companion”. The About and Apps/examples dialogs will also change slightly to reflect the new information.

There are other fields, such as author, email, files, and image that can provide more useful information about the application. For an example of how these are used, check out the info.json file for MotionScope.
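A quick way to check that your info.json is valid JSON is to load it with Python. The sketch below also illustrates the idea of falling back to the directory name when the file is missing; load_app_info() is a hypothetical helper written for this example and is not Vizy's actual loader.

```python
import json
import os
import tempfile


def load_app_info(app_dir):
    """Read info.json from app_dir, falling back to the directory name."""
    info = {"name": os.path.basename(os.path.normpath(app_dir))}
    path = os.path.join(app_dir, "info.json")
    try:
        with open(path) as f:
            info.update(json.load(f))
    except FileNotFoundError:
        pass  # No info.json: keep the guessed values
    return info


# Demonstrate with a temporary directory standing in for apps/pet_companion
with tempfile.TemporaryDirectory() as tmp:
    app_dir = os.path.join(tmp, "pet_companion")
    os.makedirs(app_dir)
    before = load_app_info(app_dir)
    with open(os.path.join(app_dir, "info.json"), "w") as f:
        json.dump({"name": "Pet Companion", "version": "1.0"}, f)
    after = load_app_info(app_dir)

print(before["name"], "->", after["name"])
```

A malformed file would raise json.JSONDecodeError here, which points at the line and column of the problem.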

Ball launcher

Being able to give your pet treats remotely is pretty great… being able to play fetch with your pet is even better. You can make a robotic fetch machine/ball launcher (although we haven't found a good guide for this), or you can buy one. Making Vizy control these fetch machines is part of the fun (we think). Hopefully, this guide has helped convince you that it isn't too difficult!

So imagine yourself standing in line at the grocery store and instead of texting your human buddies, you bring up Pet Companion, call your little guy/gal over, give them a treat, and engage in a quick round of fetch, then give them some more treats for good measure.

Auto-treat

Consider adding an “auto-treat” option by using the treat dispenser and the TensorFlow detection of your pet. For example, the auto-treat could be triggered when your pet sits in a particular place for an extended period of time, like his/her crate (put the treat dispenser where he/she can reach it within the crate). This could help with crate training, for example.
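One way to approach auto-treat is a dwell timer that fires once when the pet has been detected continuously for some time, and resets when the pet leaves. The sketch below shows only that logic; DwellTrigger is a hypothetical class, the 60-second dwell is an arbitrary choice, and the pet_present flag would come from the filtered detections.

```python
class DwellTrigger:
    """Fire once when a pet has been seen continuously for `dwell` seconds."""

    def __init__(self, dwell):
        self.dwell = dwell
        self.since = None   # Time the pet was first seen in this visit
        self.fired = False  # Whether this visit already earned a treat

    def update(self, pet_present, now):
        if not pet_present:
            # Pet left: reset so the next visit starts a new count
            self.since = None
            self.fired = False
            return False
        if self.since is None:
            self.since = now
        if not self.fired and now - self.since >= self.dwell:
            self.fired = True
            return True
        return False


# (pet_present, time in seconds) pairs simulating two visits to the crate
events = [(True, 0), (True, 30), (True, 60), (True, 90),
          (False, 100), (True, 110), (True, 170)]
trigger = DwellTrigger(60)
fired = [trigger.update(present, t) for present, t in events]
print(fired)
```

In practice a single missed detection would reset the timer, so you may want to tolerate short gaps before treating the pet as gone.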

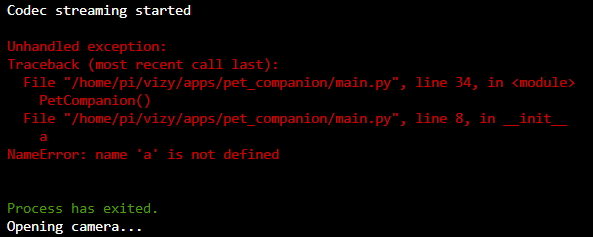

When things go wrong

Bugs and writing software go hand in hand. If Pet Companion doesn't do what it's supposed to do, check the App console for errors. Depending on the issue, it might print an error but keep on going, or the issue might cause the process to exit entirely.

Or your program can stop without exiting (i.e. hang). You can press ctrl-c inside the App console to interrupt and possibly terminate the process just as if you were running the app from a shell. (You can do this to a normally running application and it will restart.)

It's often useful to have the App console visible while developing code so you can catch errors when they happen.
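If you would rather have a failing step report its error and keep going than stop the loop, you can wrap the step yourself. This is a minimal sketch using only the standard library; safe_step() is a hypothetical helper, and whether continuing after an error is appropriate depends on the step.

```python
import traceback


def safe_step(step, *args):
    """Run one step; print the traceback and return None if it raises."""
    try:
        return step(*args)
    except Exception:
        traceback.print_exc()  # Printed to stderr along with the full stack
        return None


print(safe_step(lambda x: x + 1, 1))
print(safe_step(lambda: 1 / 0))
```

The first call returns its result normally; the second prints a ZeroDivisionError traceback and returns None instead of ending the program.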

Just show me the app

You can find a more or less complete version of Pet Companion in the Vizy Examples – that is, bring up the Apps/examples dialog, choose Examples and browse to Pet Companion. If you can't find Pet Companion in the Examples, make sure you're running version 0.1.98 or greater, and update if necessary.

The source code can also be found here.

Wrapping up

You've just made an application with video streaming, deep learning, cloud communication, interactivity, IoT, and robotics. Nice work! Give yourself a pat on the back for being such a dedicated pet owner. Your pet would totally give you a treat if he/she could!